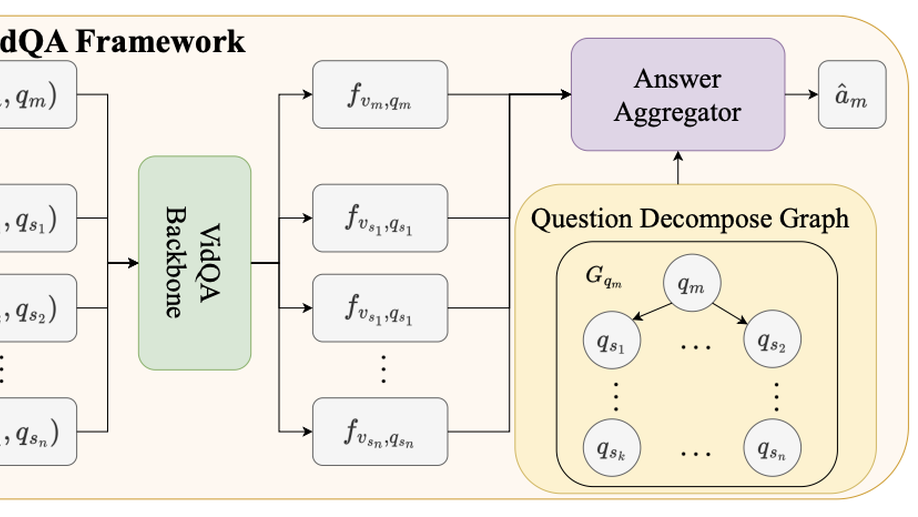

Align and Aggregate: Compositional Reasoning with Video Alignment and Answer Aggregation for Video Question-Answering

Despite recent progress in Video Question-Answering (VideoQA), existing methods typically function as black boxes, making it difficult to understand their reasoning processes and to perform consistent compositional reasoning. To address these challenges, we propose a model-agnostic Video Alignment and Answer Aggregation (VA3) framework, which enhances both the compositional consistency and the accuracy of existing VideoQA methods by integrating video aligner and answer aggregator modules. The video aligner hierarchically selects the relevant video clips based on the question, while the answer aggregator deduces the answer to the question based on its sub-questions, with compositional consistency ensured by the information flow along the question decomposition graph and a contrastive learning strategy. We evaluate our framework on three settings of the AGQA-Decomp dataset with three baseline methods, and propose new metrics to measure the compositional consistency of VideoQA methods more comprehensively. Moreover, we propose a large language model (LLM) based automatic question decomposition pipeline to apply our framework to any VideoQA dataset. We extend the MSVD and NExT-QA datasets with it to evaluate our VA3 framework in broader scenarios. Extensive experiments show that our framework improves both the compositional consistency and the accuracy of existing methods, leading to more interpretable real-world VideoQA models.

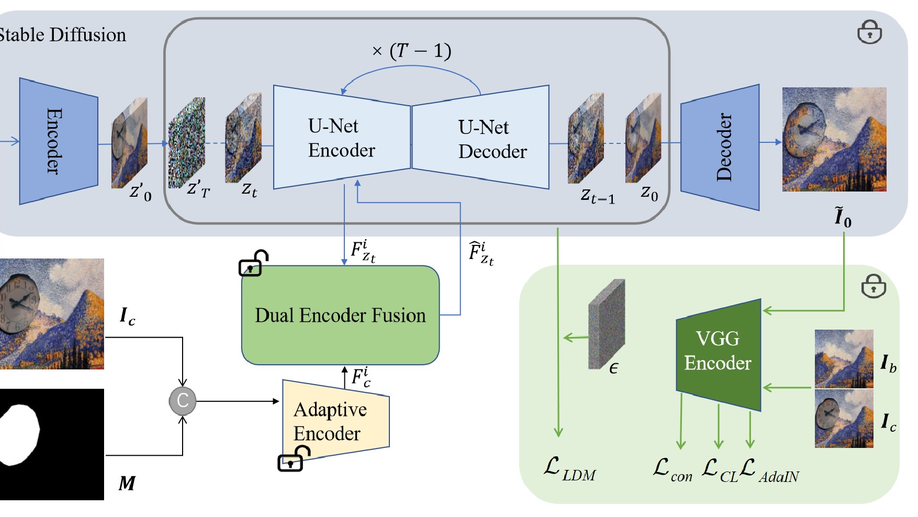

Painterly Image Harmonization using Diffusion Model

Painterly image harmonization aims to insert photographic objects into paintings and obtain artistically coherent composite images. Previous methods for this task mainly rely on inference optimization or generative adversarial networks, but they are either very time-consuming or struggle with fine control of the foreground objects (e.g., texture and content details). To address these issues, we focus on feed-forward diffusion models and propose a novel Stable Diffusion with Dual Encoder fusion network (SDENet), which includes a lightweight adaptive encoder and a Dual Encoder Fusion (DEF) module. Specifically, the adaptive encoder and the DEF module first stylize foreground features by aggregating relevant style information from the background within each encoder. Then, the stylized foreground features from both encoders are combined to provide sufficient guidance for the harmonization process. During training, besides the noise loss of the diffusion model, we additionally employ two style losses, i.e., an AdaIN style loss and a contrastive style loss, aiming to balance the trade-off between style migration and content preservation. Compared with state-of-the-art models from related fields, our SDENet stylizes the foreground more sufficiently while retaining finer content on the benchmark datasets.
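An AdaIN-style loss is commonly formulated as matching the channel-wise means and standard deviations of two feature maps. The following is a minimal pure-Python sketch under that assumption; the function names and the "list of channels, each a flat list of activations" layout are illustrative choices, not SDENet's actual implementation.

```python
import math


def channel_stats(feature, eps=1e-5):
    """Return a (mean, std) pair for every channel of a feature map.

    `feature` is assumed to be a list of channels, each a flat list of floats.
    """
    stats = []
    for channel in feature:
        mean = sum(channel) / len(channel)
        var = sum((v - mean) ** 2 for v in channel) / len(channel)
        stats.append((mean, math.sqrt(var + eps)))
    return stats


def adain_style_loss(output_feature, style_feature):
    """Average squared gap between channel-wise means and stds."""
    out_stats = channel_stats(output_feature)
    sty_stats = channel_stats(style_feature)
    loss = 0.0
    for (m_o, s_o), (m_s, s_s) in zip(out_stats, sty_stats):
        loss += (m_o - m_s) ** 2 + (s_o - s_s) ** 2
    return loss / len(out_stats)


# Hypothetical one-channel features: same spread, means differ by 1.
print(adain_style_loss([[0.0, 2.0]], [[1.0, 3.0]]))
```

Because only first- and second-order statistics are compared, such a loss pushes the output toward the style's overall color and texture statistics without constraining spatial layout, which is one reason it is usually paired with a content-oriented term.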

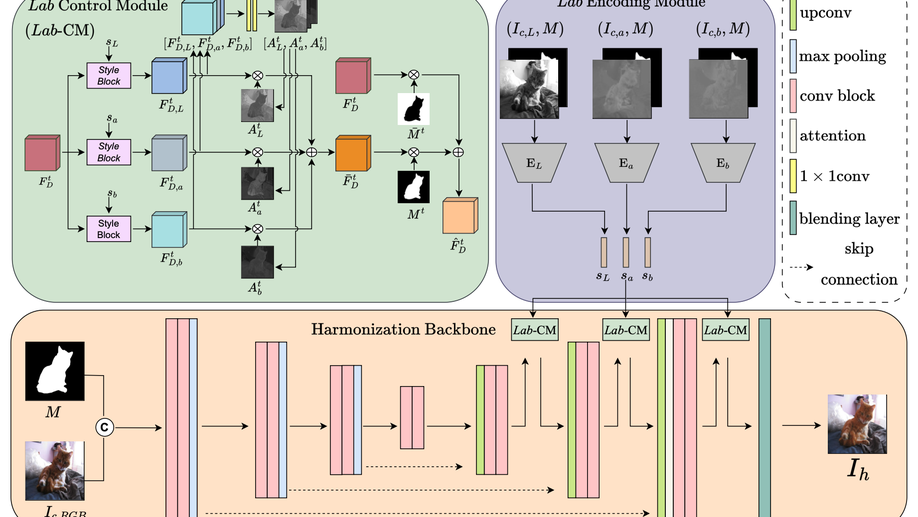

Deep Image Harmonization in Dual Color Spaces

Image harmonization is an essential step in image composition that adjusts the appearance of the composite foreground to address the inconsistency between foreground and background. Existing methods primarily operate in the correlated 𝑅𝐺𝐵 color space, leading to entangled features and limited representation ability. In contrast, a decorrelated color space (e.g., 𝐿𝑎𝑏) has decorrelated channels that provide disentangled color and illumination statistics. In this paper, we explore image harmonization in dual color spaces, which supplements entangled 𝑅𝐺𝐵 features with disentangled 𝐿, 𝑎, 𝑏 features to alleviate the workload of the harmonization process. The network comprises an 𝑅𝐺𝐵 harmonization backbone, an 𝐿𝑎𝑏 encoding module, and an 𝐿𝑎𝑏 control module. The backbone is a U-Net that translates the composite image into a harmonized image. Three encoders in the 𝐿𝑎𝑏 encoding module extract three control codes independently from the 𝐿, 𝑎, 𝑏 channels, which are used to manipulate the decoder features in the harmonization backbone via the 𝐿𝑎𝑏 control module. Our code and model are available at https://github.com/bcmi/DucoNet-Image-Harmonization.

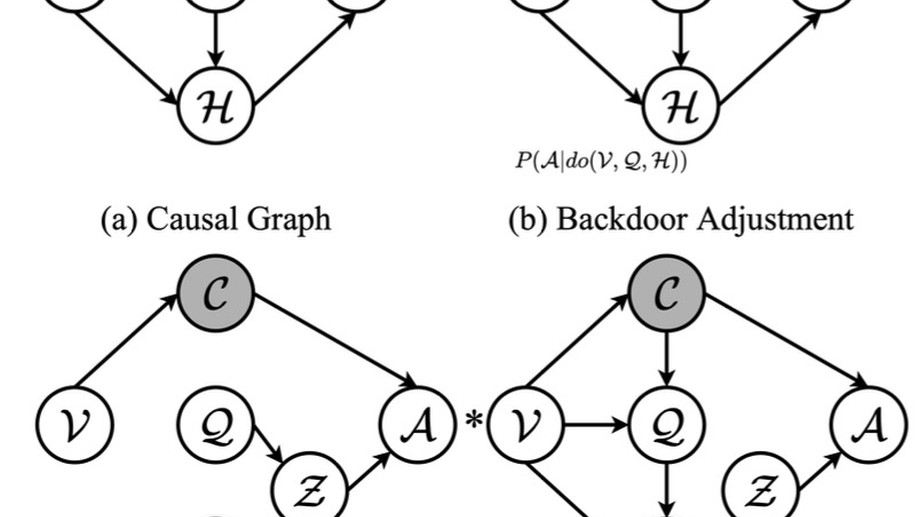

Knowledge Proxy Intervention for Deconfounded Video Question Answering

Recently, Video Question-Answering (VideoQA) has drawn increasing attention from both industry and the research community. Despite the success achieved by recent works, dataset bias misleads current methods into focusing on spurious correlations in the training data. To analyze the effects of dataset bias, we frame the VideoQA pipeline as a causal graph, which shows the causal relations among the video, the question, the aligned feature between video and question, the answer, and the underlying confounder. Through the causal graph, we prove that the confounder and the backdoor path lead to spurious causality. To tackle the challenge that the confounder in VideoQA is unobserved and non-enumerable in general, we propose a model-agnostic framework called Knowledge Proxy Intervention (KPI), which introduces an extra knowledge proxy variable into the causal graph to cut the backdoor path and remove the effect of the confounder. Our KPI framework exploits the front-door adjustment, which requires no prior knowledge about the confounder. The effectiveness of our KPI framework is corroborated with three baseline methods on five benchmark datasets: MSVD-QA, MSRVTT-QA, TGIF-QA, NExT-QA, and Causal-VidQA.
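For reference, the standard front-door adjustment from causal inference can be written as follows. The symbols are generic and the mapping is an assumption made for illustration: $X$ stands for the video-question input, $M$ for a mediator such as the knowledge proxy, and $Y$ for the answer. How KPI instantiates and estimates each term is not specified in this abstract.

```latex
P\bigl(Y \mid do(X = x)\bigr)
  = \sum_{m} P(m \mid x) \sum_{x'} P\bigl(Y \mid m, x'\bigr)\, P(x')
```

Every term on the right-hand side involves only observed variables, which is why this adjustment needs no knowledge of the unobserved confounder.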

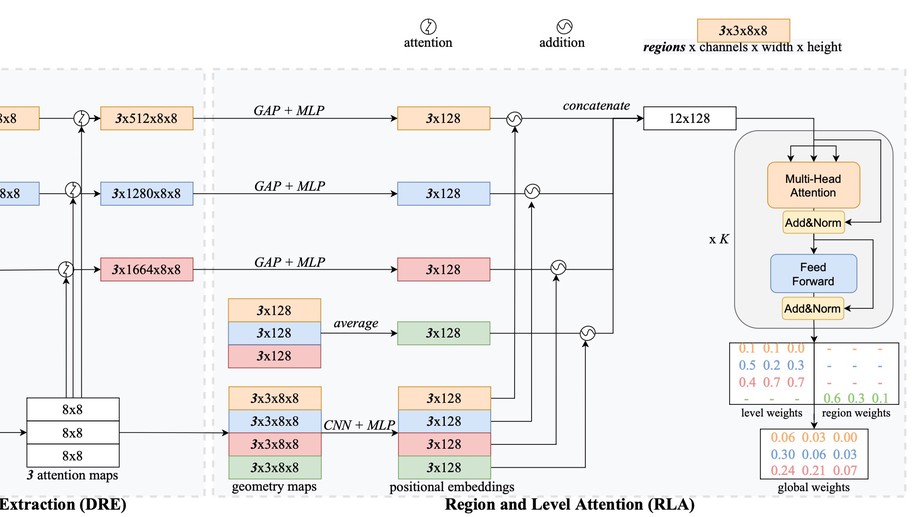

Multi-Level Region Matching for Fine-Grained Sketch-Based Image Retrieval

Fine-Grained Sketch-Based Image Retrieval (FG-SBIR) uses free-hand sketches as queries to perform instance-level retrieval in an image gallery. Existing works usually leverage only high-level information and perform matching in a single region. However, both low-level and high-level information help to establish fine-grained correspondence. Besides, we argue that matching different regions between each sketch-image pair can further boost model robustness. Therefore, we propose Multi-Level Region Matching (MLRM) for FG-SBIR, which consists of two modules: a Discriminative Region Extraction (DRE) module and a Region and Level Attention (RLA) module. In DRE, we propose Light-weighted Attention Map Augmentation (LAMA) to extract local features from different regions. In RLA, we propose a transformer-based attentive matching module that learns attention weights to capture the differing importance of image/sketch regions and feature levels. Furthermore, to ensure that geometric and semantic distinctiveness is well modeled, we also explore a novel LAMA overlapping penalty and a local region-negative triplet loss in our proposed MLRM method. Comprehensive experiments conducted on five datasets (i.e., Sketchy, QMUL-ChairV2, QMUL-ShoeV2, QMUL-Chair, QMUL-Shoe) demonstrate the effectiveness of our method.
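As background for the triplet loss mentioned above, here is a minimal sketch of the generic margin-based triplet loss on plain Python lists. It illustrates only the standard formulation; how MLRM chooses "local region" negatives is not described in this abstract, and the function names and margin value are illustrative assumptions.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard triplet loss: the positive should be closer to the anchor
    than the negative by at least `margin`; otherwise a penalty is paid."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Hypothetical 2-D embeddings: sketch anchor, matching photo, non-matching photo.
sketch = [0.0, 0.0]
matching_photo = [0.1, 0.0]
other_photo = [3.0, 4.0]
print(triplet_loss(sketch, matching_photo, other_photo))
```

The loss is zero once the negative is sufficiently far away, so training effort concentrates on triplets that still violate the margin.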

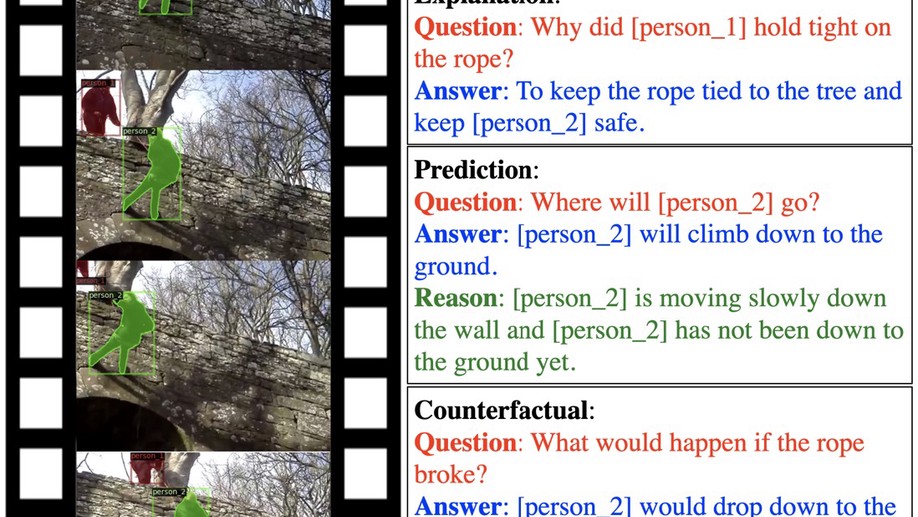

From Representation to Reasoning: Towards both Evidence and Commonsense Reasoning for Video Question-Answering

Video understanding has achieved great success in representation learning tasks such as video captioning, video object grounding, and descriptive video question-answering. However, current methods still struggle with video reasoning, including evidence reasoning and commonsense reasoning. To facilitate deeper video understanding towards video reasoning, we present the task of Causal-VidQA, which includes four types of questions ranging from scene description (description) to evidence reasoning (explanation) and commonsense reasoning (prediction and counterfactual). For commonsense reasoning, we set up a two-step solution: answering the question and providing a proper reason. Through extensive experiments on existing VideoQA methods, we find that the state-of-the-art methods are strong in description but weak in reasoning. We hope that Causal-VidQA can guide the research of video understanding from representation learning to deeper reasoning. The dataset and related resources are available at https://github.com/bcmi/Causal-VidQA.git.

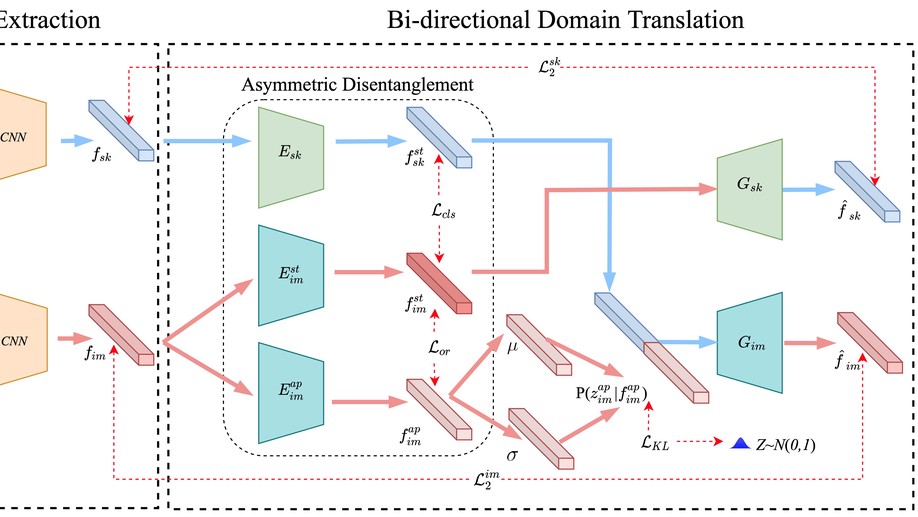

Zero-Shot Sketch-Based Image Retrieval with Structure-aware Asymmetric Disentanglement

The goal of Sketch-Based Image Retrieval (SBIR) is to use free-hand sketches to retrieve images of the same category from a natural image gallery. However, SBIR requires all test categories to be seen during training, which cannot be guaranteed in real-world applications. We therefore investigate the more challenging Zero-Shot SBIR (ZS-SBIR), in which test categories do not appear in the training stage. Observing that sketches mainly contain structure information while images contain additional appearance information, we attempt to achieve structure-aware retrieval via asymmetric disentanglement. For this purpose, we propose our STRucture-aware Asymmetric Disentanglement (STRAD) method, in which image features are disentangled into structure features and appearance features while sketch features are only projected to the structure space. By disentangling the structure and appearance spaces, bi-directional domain translation is performed between the sketch domain and the image domain. Extensive experiments demonstrate that our STRAD method remarkably outperforms state-of-the-art methods on three large-scale benchmark datasets.

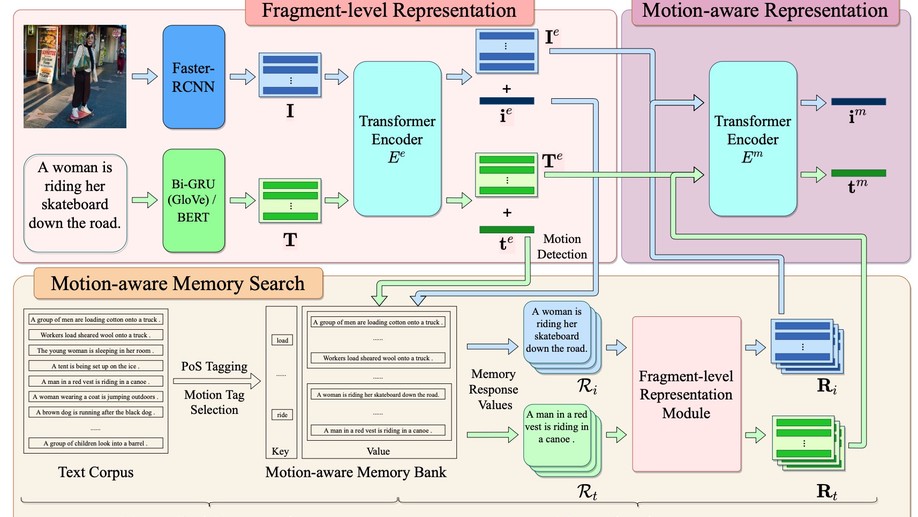

Action-Aware Embedding Enhancement for Image-Text Retrieval

Image-text retrieval plays a central role in bridging vision and language, and aims to reduce the semantic discrepancy between images and texts. Most existing works rely on refined word and object representations, learned in a data-oriented manner, to capture word-object co-occurrence. Such approaches tend to ignore the asymmetric action relation between images and texts: the text has an explicit action representation (i.e., a verb phrase), while the image only contains implicit action information. In this paper, we propose the Action-aware Memory-Enhanced embedding (AME) method for image-text retrieval, which aims to emphasize the action information when mapping the images and texts into a shared embedding space. Specifically, we integrate action prediction with an action-aware memory bank to enrich the image and text features with action-similar text features. The effectiveness of our proposed AME method is verified by comprehensive experimental results on two benchmark datasets.

Memorize, Associate and Match: Embedding Enhancement via Fine-Grained Alignment for Image-Text Retrieval

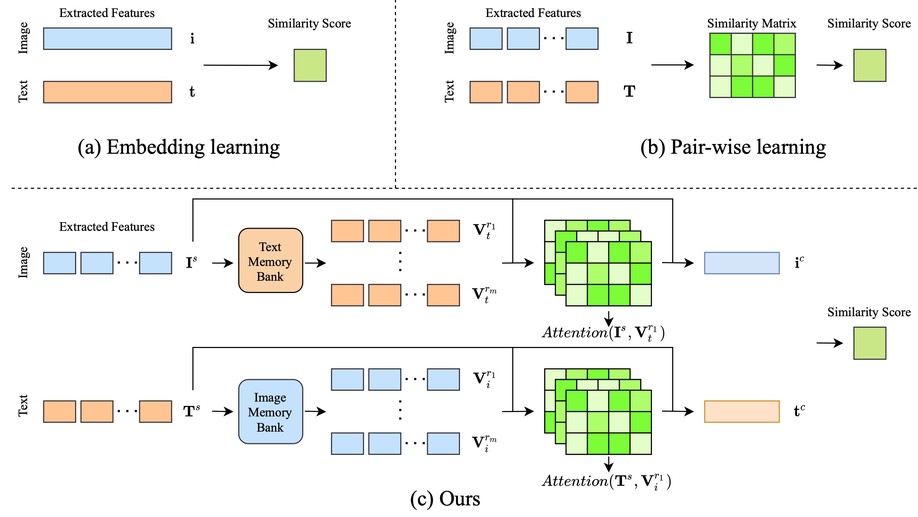

Image-text retrieval aims to capture the semantic correlation between images and texts. Existing image-text retrieval methods can be roughly categorized into the embedding learning paradigm and the pair-wise learning paradigm. The former fails to capture the fine-grained correspondence between images and texts. The latter achieves fine-grained alignment between regions and words, but the high cost of pair-wise computation leads to slow retrieval speed. In this paper, we propose a novel method, Memory-based EMBedding Enhancement for image-text Retrieval (MEMBER), which introduces global memory banks to enable fine-grained alignment and fusion in the embedding learning paradigm. Specifically, we enrich image (resp., text) features with relevant text (resp., image) features stored in the text (resp., image) memory bank. In this way, our model not only accomplishes mutual embedding enhancement across the two modalities, but also maintains retrieval efficiency. Extensive experiments demonstrate that our MEMBER remarkably outperforms state-of-the-art approaches on two large-scale benchmark datasets.
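One common way to enrich a feature with entries from a memory bank is soft attention: score every memory slot against the query feature, normalize the scores, and add the weighted sum back to the query. The sketch below assumes that mechanism purely for illustration; the function names, the dot-product scoring, and the residual addition are assumptions, and MEMBER's actual fusion may differ.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def enhance_with_memory(query, memory_bank):
    """Add an attention-weighted summary of the memory bank to the query."""
    weights = softmax([dot(query, slot) for slot in memory_bank])
    retrieved = [
        sum(w * slot[i] for w, slot in zip(weights, memory_bank))
        for i in range(len(query))
    ]
    return [q + r for q, r in zip(query, retrieved)]


# Hypothetical image feature enriched from a two-slot text memory bank.
image_feature = [0.0, 0.0]
text_memory = [[2.0, 0.0], [0.0, 2.0]]
print(enhance_with_memory(image_feature, text_memory))
```

In a design like this, each image or text is enhanced against a fixed bank independently of any particular query-candidate pair, so embeddings can still be precomputed and compared with a single similarity lookup at retrieval time.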

Video Semantic Segmentation via Sparse Temporal Transformer

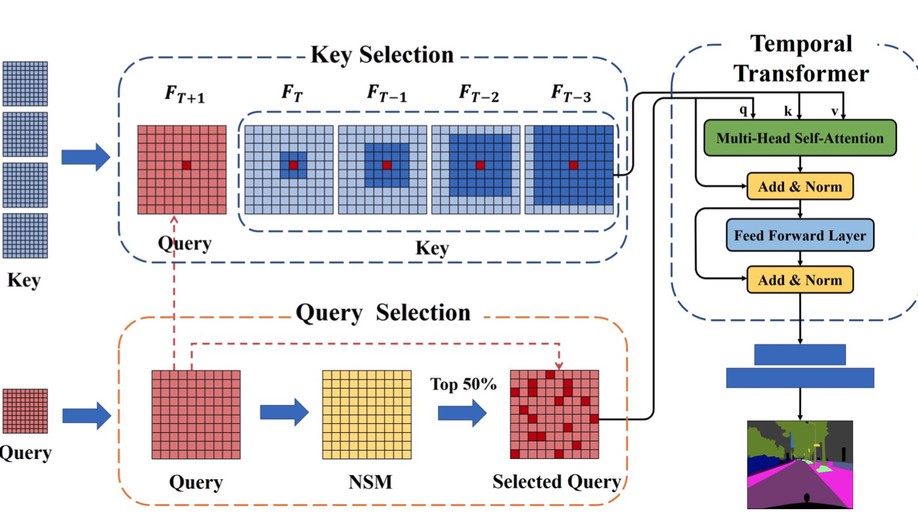

Currently, video semantic segmentation mainly faces two challenges: 1) the demand for temporal consistency; 2) the balance between segmentation accuracy and inference efficiency. For the first challenge, existing methods usually use optical flow to capture the temporal relation between consecutive frames and maintain temporal consistency, but the low inference speed of optical flow limits real-time applications. For the second challenge, flow-based key frame warping is one mainstream solution. However, the unbalanced inference latency of flow-based key frame warping makes it unsatisfactory for real-time applications. Considering both segmentation accuracy and inference efficiency, we propose a novel Sparse Temporal Transformer (STT) that adaptively bridges temporal relations among video frames and is equipped with query selection and key selection. The key selection and query selection strategies are applied separately to filter out temporal and spatial redundancy in our temporal transformer. Specifically, our STT can reduce the time complexity of the temporal transformer by a large margin without harming segmentation accuracy or temporal consistency. Experiments on two benchmark datasets, Cityscapes and CamVid, demonstrate that our method achieves state-of-the-art segmentation accuracy and temporal consistency with comparable inference speed.

Activity Image-to-Video Retrieval by Disentangling Appearance and Motion

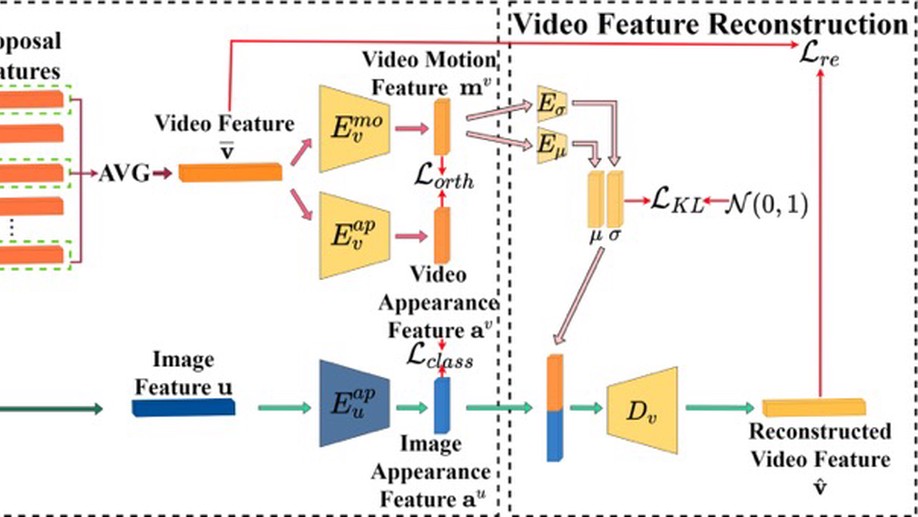

With the rapid emergence of video data, image-to-video retrieval has attracted much attention. There are two types of image-to-video retrieval: instance-based and activity-based. The former aims to retrieve videos containing the same main objects as the query image, while the latter focuses on finding videos with similar activities. Since dynamic information plays a significant role in video, we focus on the latter task to explore the motion relation between images and videos. In this paper, we propose a Motion-assisted Activity Proposal-based Image-to-Video Retrieval (MAP-IVR) approach, which disentangles the video features into motion features and appearance features and obtains appearance features from the images. Then, we perform image-to-video translation to improve the disentanglement quality. The retrieval is performed in both appearance and video feature spaces. Extensive experiments demonstrate that our MAP-IVR approach remarkably outperforms the state-of-the-art approaches on two benchmark activity-based video datasets.

Lattice-Based Transformer Encoder for Neural Machine Translation

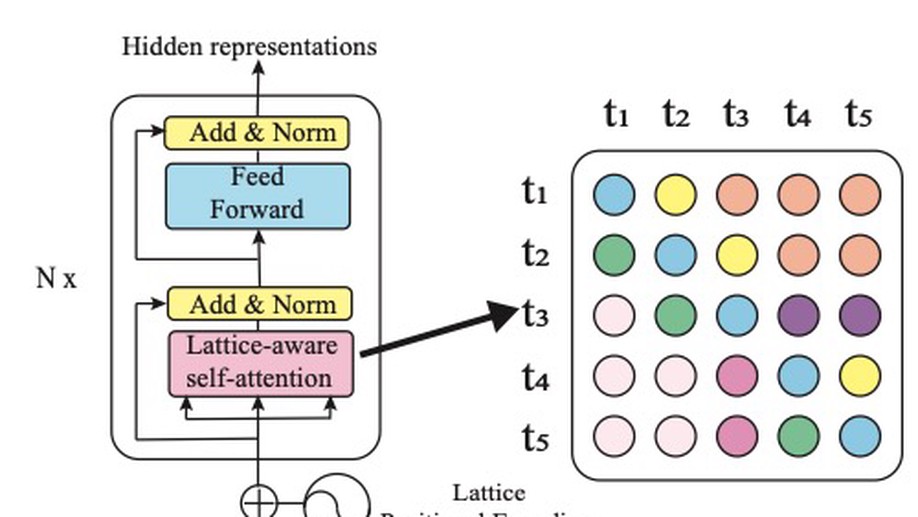

Neural machine translation (NMT) takes deterministic sequences as source representations. However, both word-level and subword-level segmentation offer multiple ways to split a source sequence, depending on the word segmenter or the subword vocabulary size. We hypothesize that the diversity in segmentations may affect NMT performance. To integrate different segmentations with the state-of-the-art NMT model, Transformer, we propose lattice-based encoders that explore effective word or subword representations automatically during training. We propose two methods: 1) lattice positional encoding and 2) lattice-aware self-attention. These two methods can be used together and are complementary to each other, further improving translation performance. Experimental results show the superiority of lattice-based encoders in word-level and subword-level representations over the conventional Transformer encoder.
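To make the idea of a segmentation lattice concrete, the sketch below merges several segmentations of the same sentence into one set of edges, each recording the character span a token covers. Using the start offset of each token as its position is an assumption made for illustration, and `build_lattice` is a hypothetical helper, not code from the paper.

```python
def build_lattice(segmentations):
    """Merge several segmentations of one sentence into sorted lattice edges.

    Each edge is (start_offset, end_offset, token). Tokens shared by several
    segmentations appear once, since they cover the same character span.
    """
    edges = set()
    sentence = "".join(segmentations[0])
    for tokens in segmentations:
        if "".join(tokens) != sentence:
            raise ValueError("all segmentations must cover the same sentence")
        start = 0
        for token in tokens:
            end = start + len(token)
            edges.add((start, end, token))
            start = end
    return sorted(edges)


# Two hypothetical segmentations of the character sequence "abcd".
for start, end, token in build_lattice([["ab", "cd"], ["a", "bcd"]]):
    print(start, end, token)
```

Tokens from different segmentations that begin at the same character share a start offset, which gives an encoder a consistent notion of position across otherwise incompatible token sequences.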

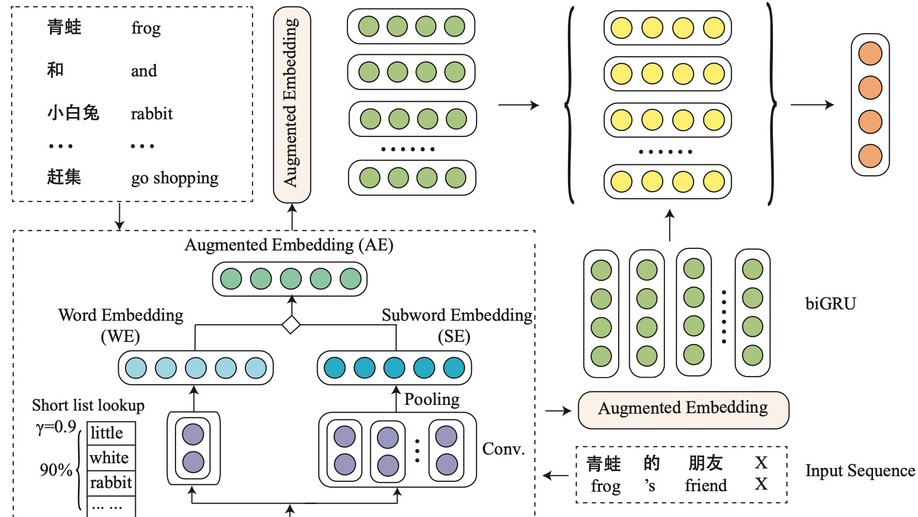

Effective Subword Segmentation for Text Comprehension

Representation learning is the foundation of machine reading comprehension and inference. In state-of-the-art models, character-level representations have been broadly adopted to alleviate the problem of effectively representing rare or complex words. However, a character is not by itself a natural minimal linguistic unit for representation or word embedding composition, because it ignores the linguistic coherence of consecutive characters inside a word. This paper presents a general subword-augmented embedding framework for learning and composing computationally derived subword-level representations. We survey a series of unsupervised segmentation methods for subword acquisition and different subword-augmented strategies for text understanding, showing that subword-augmented embedding significantly improves our baselines in various types of text understanding tasks on both English and Chinese benchmarks.

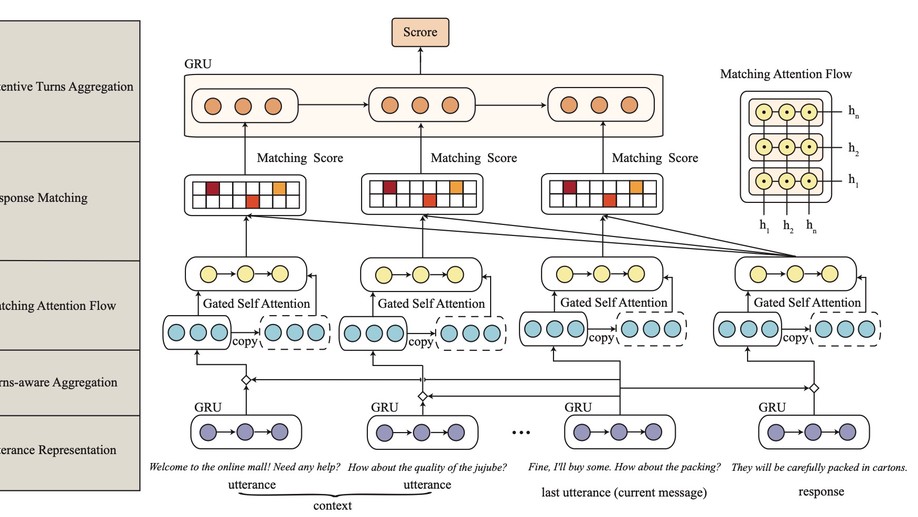

Modeling Multi-turn Conversation with Deep Utterance Aggregation

Multi-turn conversation understanding is a major challenge for building intelligent dialogue systems. This work focuses on retrieval-based response matching for multi-turn conversation, where prior work simply concatenates the conversation utterances, ignoring the interactions among previous utterances for context modeling. In this paper, we formulate previous utterances into context using a proposed deep utterance aggregation model to form a fine-grained context representation. In detail, self-matching attention is first introduced to route the vital information in each utterance. Then the model matches a response with each refined utterance, and the final matching score is obtained after attentive turns aggregation. Experimental results show that our model outperforms the state-of-the-art methods on three multi-turn conversation benchmarks, including a newly introduced e-commerce dialogue corpus.